Prompt Engineering: Tools, Best Practices, and Techniques

Writing effective prompts has become a crucial skill for developers working with large language models (LLMs) like ChatGPT. This guide walks you through the essentials of prompt engineering, from basic techniques to advanced strategies, and introduces the best prompt management tools for optimizing your prompts.

What is Prompt Engineering?

Prompt engineering is the art and science of crafting effective inputs (prompts) to guide AI models toward generating desired outputs. As highlighted in the official prompting guide from OpenAI, well-designed prompts can significantly improve the performance and reliability of AI-generated content.

Why is Prompt Engineering Important?

- Improves AI model performance: Precise prompts help models generate more accurate and relevant responses.

- Reduces errors and hallucinations: Clear instructions minimize the risk of the AI producing incorrect or nonsensical information.

- Improves consistency in outputs: Structured prompts lead to more consistent and reliable results.

- Enables more complex and nuanced tasks: Advanced prompting techniques allow for handling sophisticated tasks that require detailed reasoning.

How to be a Prompt Engineer in 2024: Key Skills and Knowledge

To excel in prompt engineering in the era of Generative AI, developers should:

- Understand the capabilities and limitations of AI models (more on this later).

- Develop strong communication and language skills to craft clear and concise prompts.

- Cultivate creativity for crafting prompts for different models and tasks.

- Stay updated with the latest AI developments and best practices.

- Use prompt management tools to organize and optimize prompts.

Comparing OpenAI o1, GPT-4, Gemini, and Claude 3.5 Sonnet: Strengths and Limitations

Understanding the capabilities of different large language models (LLMs) helps you craft effective prompts that leverage each model’s strengths. Here’s a simplified comparison focused on aspects relevant to prompt engineering:

OpenAI o1

Strengths

- Demonstrates advanced reasoning, particularly in complex mathematical and logical tasks.

- Excels at step-by-step problem-solving using chain-of-thought processes.

- Provides accurate and thoughtful responses for challenging questions.

Limitations

- ⚠️ Responses are slower due to detailed reasoning, which makes quick back-and-forth interactions less practical and puts more weight on getting the prompt right the first time.

- ⚠️ High computational demands may limit accessibility for some users.

- ⚠️ Might prioritize logical analysis over creativity in some cases.

GPT-4

Strengths

- Excels in understanding and generating text across a wide range of topics.

- Handles complex instructions well, producing detailed and coherent responses.

- Effective at following context and maintaining continuity in conversations.

Limitations

- ⚠️ Knowledge cutoff of September 2021 for the original GPT-4 (later variants such as GPT-4 Turbo have later cutoffs); lacks information on events after its cutoff.

- ⚠️ May occasionally produce incorrect or nonsensical answers (hallucinations).

- ⚠️ The base model primarily processes text; image input requires a vision-enabled variant, and audio requires additional tools.

Gemini

Strengths

- Designed as a multimodal model, capable of understanding and generating text, images, and other data types.

- Aims to integrate advanced reasoning and problem-solving skills.

- Expected to offer enhanced capabilities for complex tasks requiring diverse data inputs.

Limitations

- ⚠️ Performance varies depending on the version (e.g., Gemini Pro vs Ultra).

- ⚠️ Can struggle with maintaining consistent persona across long conversations.

- ⚠️ Outputs may reflect biases present in the training data.

Claude 3.5 Sonnet

Strengths

- Excels in logical reasoning, analysis, and maintaining consistent persona.

- Highly capable in detailed analysis and explanation of complex topics.

- Focuses on providing helpful and safe responses.

Limitations

- ⚠️ May sometimes refuse to engage with certain topics due to ethical constraints.

- ⚠️ Limited multimodal capabilities compared to some competitors.

- ⚠️ May struggle with tasks requiring real-time information or web access.

Note: These models are continually evolving. For effective prompt engineering, it’s important to understand each model’s unique capabilities and limitations. Tailoring your prompts to play to a model’s strengths can significantly enhance the quality of the AI’s responses.

Prompt Engineering Techniques and Best Practices

1. Be specific and clear

Provide detailed instructions and context to guide the AI’s response.

Example

Poor: "Write about dogs."

Better: "Write a 300-word article about the health benefits of owning a dog, including both physical and mental health aspects."

2. Use structured formats

Organize your prompts with clear sections or steps.

Example

Task: Write a product description

Product: Wireless Bluetooth Headphones

Key Features:

1. 30-hour battery life

2. Active noise cancellation

3. Water-resistant (IPX4)

Tone: Professional and enthusiastic

Length: 150 words
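As a rough sketch, the structured prompt above can be assembled from labeled parts in code, which keeps the sections consistent when you reuse the layout for other products. The function name `build_structured_prompt` and its parameters are illustrative assumptions, not part of any library.

```python
def build_structured_prompt(task: str, product: str, features: list[str],
                            tone: str, length_words: int) -> str:
    """Assemble a prompt with one labeled section per line (hypothetical helper)."""
    lines = [
        f"Task: {task}",
        f"Product: {product}",
        "Key Features:",
    ]
    # Number the features so each one is clearly separated in the prompt.
    lines += [f"{i}. {feature}" for i, feature in enumerate(features, start=1)]
    lines += [f"Tone: {tone}", f"Length: {length_words} words"]
    return "\n".join(lines)


prompt = build_structured_prompt(
    task="Write a product description",
    product="Wireless Bluetooth Headphones",
    features=[
        "30-hour battery life",
        "Active noise cancellation",
        "Water-resistant (IPX4)",
    ],
    tone="Professional and enthusiastic",
    length_words=150,
)
print(prompt)
```

Keeping the section labels in one place means a change to the layout applies to every prompt built from it.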

3. Leverage role-playing

Assign a specific role or persona to the AI for more tailored responses.

Example

Act as an experienced data scientist explaining the concept of neural networks to a junior developer. Include an analogy to help illustrate the concept.
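In chat-style APIs, a persona is often placed in a system message rather than in the user's request. The sketch below assumes the common `role`/`content` message layout; field names can differ between providers, and `build_role_messages` is a hypothetical helper.

```python
def build_role_messages(persona: str, audience: str, request: str) -> list[dict]:
    """Return a system message carrying the persona, followed by the user request."""
    system = f"You are {persona}. You are speaking to {audience}."
    return [
        {"role": "system", "content": system},  # persona and audience
        {"role": "user", "content": request},   # the actual task
    ]


messages = build_role_messages(
    persona="an experienced data scientist",
    audience="a junior developer",
    request=(
        "Explain the concept of neural networks. "
        "Include an analogy to help illustrate the concept."
    ),
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Separating the persona from the request makes it easy to reuse the same role across many questions.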

4. Implement few-shot learning

Provide examples of desired inputs and outputs to guide the AI’s response.

Example

Convert the following sentences to past tense:

Input: I eat an apple every day.

Output: I ate an apple every day.

Input: She runs five miles each morning.

Output: She ran five miles each morning.

Input: They are studying for their exam.

Output: They were studying for their exam.
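A few-shot prompt like the one above follows a regular pattern, so it can be generated from a list of example pairs. This is a minimal sketch: `build_few_shot_prompt` is a hypothetical helper, and the final input sentence is made up for illustration. The prompt ends with an open `Output:` line for the model to complete.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          new_input: str) -> str:
    """Format instruction, worked examples, and a new input awaiting its output."""
    parts = [instruction, ""]
    for source, target in examples:
        parts += [f"Input: {source}", f"Output: {target}", ""]
    # Leave the last output empty so the model fills it in.
    parts += [f"Input: {new_input}", "Output:"]
    return "\n".join(parts)


few_shot_prompt = build_few_shot_prompt(
    instruction="Convert the following sentences to past tense:",
    examples=[
        ("I eat an apple every day.", "I ate an apple every day."),
        ("She runs five miles each morning.", "She ran five miles each morning."),
        ("They are studying for their exam.", "They were studying for their exam."),
    ],
    new_input="He writes a letter to his friend.",
)
print(few_shot_prompt)
```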

5. Use constrained outputs

Specify the desired format or structure of the AI’s response.

Example

Generate a list of 5 book recommendations for someone who enjoys science fiction. Format your response as a numbered list with the book title, author, and a one-sentence description for each recommendation.

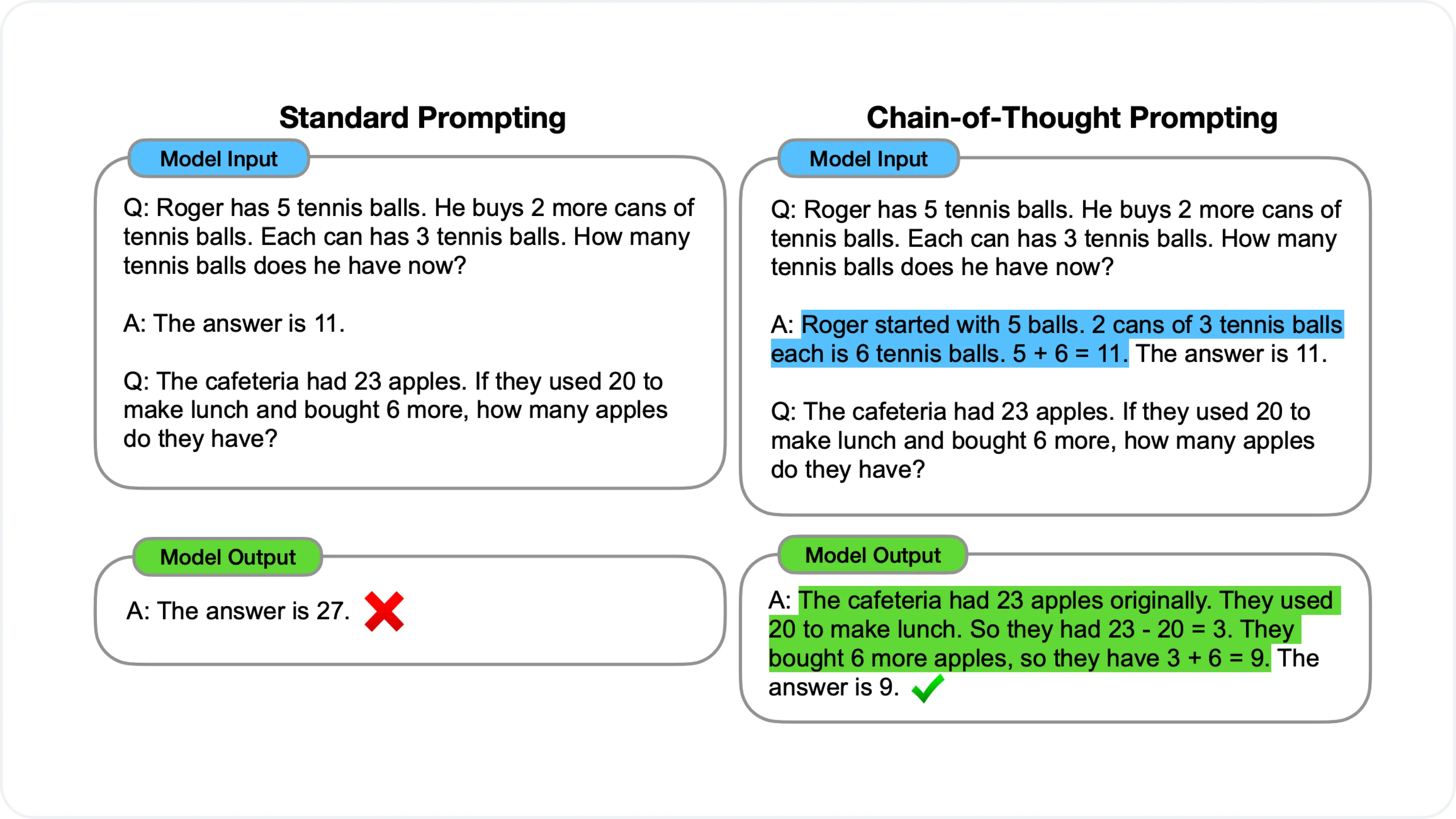
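A constrained format also lets you check a response in code before using it. The sketch below assumes the prompt has been tightened to ask for lines shaped like `N. Title by Author: description`; that exact layout, the `parse_recommendations` helper, and the placeholder response are all assumptions for illustration, not real model output.

```python
import re

# One item per line: "<number>. <title> by <author>: <description>"
ITEM_PATTERN = re.compile(r"^(\d+)\.\s+(.+?)\s+by\s+(.+?):\s+(.+)$")


def parse_recommendations(response: str, expected_count: int = 5) -> list[dict]:
    """Parse a numbered list and raise ValueError if the format is not followed."""
    items = []
    for line in response.splitlines():
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between items
        match = ITEM_PATTERN.match(line)
        if match is None:
            raise ValueError(f"Line does not match the requested format: {line!r}")
        number, title, author, description = match.groups()
        items.append({
            "number": int(number),
            "title": title,
            "author": author,
            "description": description,
        })
    if len(items) != expected_count:
        raise ValueError(f"Expected {expected_count} items, found {len(items)}")
    return items


# Placeholder text standing in for a model response.
sample_response = "\n".join(
    f"{i}. Title {i} by Author {i}: Placeholder description {i}."
    for i in range(1, 6)
)
books = parse_recommendations(sample_response)
print(books[0])
```

If parsing fails, a common follow-up is to retry the request or restate the format more explicitly in the prompt.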

6. Use Chain-of-Thought prompting

Prompting for a series of intermediate reasoning steps can significantly improve the ability of large language models to perform complex reasoning.

Example

Reference: Paper on Chain-of-Thought Prompting Elicits Reasoning in Large Language Models
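As an illustrative sketch of the technique, a chain-of-thought prompt can pair a worked example that spells out its reasoning with the new question. The arithmetic example below is made up for this guide and is not taken from the paper; `build_cot_prompt` and `extract_final_answer` are hypothetical helpers, and the "The answer is N" convention is an assumption that makes the final answer easy to extract.

```python
import re

# A worked example whose answer shows the intermediate steps.
WORKED_EXAMPLE = (
    "Q: A shelf holds 4 boxes with 6 pens each. 5 pens are removed. "
    "How many pens remain?\n"
    "A: There are 4 * 6 = 24 pens at the start. "
    "Removing 5 leaves 24 - 5 = 19. The answer is 19."
)


def build_cot_prompt(question: str) -> str:
    """Prepend the worked example so the model imitates step-by-step reasoning."""
    return f"{WORKED_EXAMPLE}\n\nQ: {question}\nA:"


def extract_final_answer(response: str):
    """Return the last number following 'The answer is', or None if absent."""
    matches = re.findall(r"The answer is\s+(-?\d+(?:\.\d+)?)", response)
    return matches[-1] if matches else None


cot_prompt = build_cot_prompt(
    "A train has 3 cars with 20 seats each. 12 seats are taken. "
    "How many seats are free?"
)
print(cot_prompt)
```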


Best Prompt Management Tools

To streamline your prompt engineering workflow and improve your LLM outputs, here are some of the best prompting tools we recommend for your AI application development:

- Helicone: An open-source platform offering comprehensive prompt versioning, optimization, experimentation, and analytics.

- OpenAI Playground: An interactive environment for testing and refining prompts with various GPT models.

- Pezzo: A developer-first AI platform to manage prompts in one place.

- Agenta: A collaborative LLM development platform for working on prompts together, comparing versions, and testing them easily.

- LangChain: A framework for developing applications powered by LLMs, including prompt management features.

Prompt Management: Why It Matters

Effective prompt management is crucial for optimizing AI interactions. Here is a closer look at what it involves.

Without a dedicated prompt management tool

- ⚠️ Fragmented Prompt Management. Prompts are scattered across documents or codebases, making them hard to track and increasing the risk of errors.

- ⚠️ Limited Analytics. Without analytics, understanding how prompts perform is a guessing game. It’s difficult to optimize prompts and get high-quality responses.

- ⚠️ No Version Control. You have to track prompt changes manually and may overwrite or lose valuable iterations.

- ⚠️ Reduced Collaboration. Team collaboration on prompt development is often done ad-hoc using email or chat. It becomes harder to gather feedback, leading to slower improvement cycles.
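To make the version-control point concrete, here is a minimal sketch of the bookkeeping you would otherwise maintain by hand. `PromptHistory` is a hypothetical in-memory class written for this guide. It is not the API of any tool mentioned here, and it has no persistence, analytics, or collaboration features.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptHistory:
    """Keep every distinct revision of one named prompt, in order."""
    name: str
    versions: list = field(default_factory=list)

    def save(self, text: str) -> int:
        """Store text as a new version unless it matches the latest one.

        Returns the 1-based version number of the stored text.
        """
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if self.versions and self.versions[-1]["digest"] == digest:
            return len(self.versions)  # unchanged, no new version
        self.versions.append({"digest": digest, "text": text})
        return len(self.versions)

    def get(self, version: int) -> str:
        """Return the text of a 1-based version number."""
        return self.versions[version - 1]["text"]


history = PromptHistory("dog-article")
v1 = history.save("Write about dogs.")
v2 = history.save(
    "Write a 300-word article about the health benefits of owning a dog."
)
print(v1, v2, history.get(1))
```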

Helicone Does It All, And More

- ✅ Automatic Prompt Versioning. Effortlessly track prompt versions and automatically record changes.

- ✅ Prompt Templating & Input Tracking. Maintain a history of old prompts and input/output datasets for each prompt template.

- ✅ Experiments. Run experiments to test and improve your prompts or compare models.

- ✅ Interactive Playground. Debug and test prompts in a sandbox environment (currently supports ChatGPT and many other model providers).

What you might find useful

- Guide: How to set up Prompts in Helicone

- Guide: Debugging LLM applications with Playground

- Guide: How to run LLM prompt Experiments

- Read: What is Prompt Management?

Prompt Engineering Tips for Success

- Iterate and refine: Don’t expect perfection on the first try. Continuously improve your prompts based on AI responses.

- Be concise yet comprehensive: Provide enough detail without being overwhelming.

- Avoid ambiguity: Use clear language to minimize misunderstandings.

- Test across models: Different models may interpret prompts differently. Testing ensures broader effectiveness.

- Document your prompts: Keep a record for future reference and to track what works best.

- Study successful prompts: Analyze prompts that produce high-quality outputs to understand what makes them effective.
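The "test across models" tip can be sketched as a small harness that sends one prompt to several models and collects the responses side by side. The two stub functions below are stand-ins that return canned text; in practice each would wrap a real provider client, which is outside the scope of this sketch. All names here are hypothetical.

```python
from typing import Callable


def run_across_models(prompt: str,
                      models: dict[str, Callable[[str], str]]) -> dict[str, str]:
    """Call each model function with the same prompt and collect the outputs."""
    results = {}
    for name, call_model in models.items():
        results[name] = call_model(prompt)
    return results


# Stubs standing in for real model calls.
def stub_model_a(prompt: str) -> str:
    return f"[model-a] {prompt.upper()}"


def stub_model_b(prompt: str) -> str:
    return f"[model-b] {len(prompt.split())} words received"


results = run_across_models(
    "Summarize this text",
    {"model-a": stub_model_a, "model-b": stub_model_b},
)
for name, output in results.items():
    print(f"{name}: {output}")
```

Storing these results alongside the prompt text also covers the "document your prompts" tip, since you keep a record of what each model returned.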

Mastering the Art of Prompt Engineering

As AI continues to evolve, knowing how to write great prompts is becoming a key skill for developers and non-technical team members. Just like learning any language, the more you practice, the better you get.

Use this guide as your prompt design playbook and experiment with various prompt engineering techniques. While you’re at it, try out one of the prompt management tools mentioned above. It will help you track which prompts work and which don’t.

Remember, there’s no “perfect prompt”. Becoming proficient in prompt engineering is an iterative process. Keep an eye on the latest prompt engineering tips, experiment with different types of prompt engineering, and use data-driven insights to fine-tune your approach.